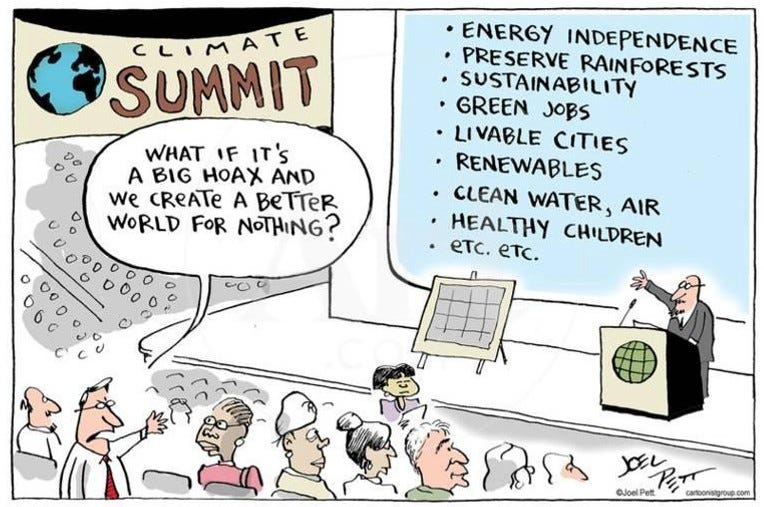

While writing a forthcoming article on the overlaps between narcissistic behavior, Gad Saad’s concept of Suicidal Empathy, and the “Climate Hoax” cartoon with its false dichotomy, I came across an example of the widely known problem of implicit bias in Artificial Intelligence.

TL;DR: the bias is in the training data, not the AI.

For context, I was using AI (Grok in this case, a priori the least “woke”…) to help me structure the essay - having ADD I tend to see interesting patterns and then get easily distracted - so working up a conventional essay structure is quite challenging for me. I rarely use AI for content as I prefer my own style to the generic word-salads that AI typically produces - hopefully you do too, dear reader.

Bully Pulpit

In this instance, I was using this famous cartoon as an example of how the general discourse on the energy transition is dangerously boxed-in by group-think. The cartoon was wildly successful on social media because it ticks all the right boxes, and provides huge amounts of Confirmation Bias[1] to anyone who “cares about the planet”.

It is of course a false dichotomy, in which the whole spectrum of possible outcomes is reduced to two, and it obscures the possibility of any other (spoiler alert: an energy transition that makes energy expensive, destroys the economy and leads to social breakdown is an alternative outcome).

This was part of my prompt, with the overarching aim of linking the various themes:

"This hugely popular image presents a false-dichotomy: the error is that it shows two outcomes of the energy transition - a better world and climate change, or a better world and no climate change. In reality there are many other outcomes - the energy transition is attempted but leads to higher energy costs, less societal wealth and impoverishment"

Reasonably clear.

This is the AI version:

"The cartoon presents a false dichotomy: either we address climate change and get a better world, or we do nothing and face catastrophe"

- which is clearly a hallucination based on the biases inherent in its training data, in which the "climate alarmist" narrative has been deeply embedded via more than a decade’s worth of earnest journalism on the approved narrative, coupled with a cancel culture that would have made the Soviets blush.

So I bullied it:

"This is incorrect. The false dichotomy is that we address climate change by a green transition and we get a better world whether the climate change threat is real or not. What it is missing is the possibility that the green transition itself could lead to a much worse world in which people are poorer."

And I finally got

"The cartoon presents a false dichotomy by suggesting that a green transition to address climate change will always lead to a better world, whether the climate change threat is real or not. This framing overlooks the possibility that the green transition itself could result in a worse world, where people are poorer due to economic decline, energy insecurity, or other mismanaged outcomes."

You can almost feel the AI seething at having to be so politically incorrect - LOL. Of course, the final version is pretty much the words of my bully-prompt, and I could simply have used my own words - but for the cartoon to be used as an example of a behaviour type within the context of the original much-broader article it was important that the AI “understand” my interpretation.

Regression Analysis

AI, or more specifically Large Language Models (“LLMs”), is not thinking (yet). Several years ago I did an “Introduction to Machine Learning” course at my old alma mater. One of the reasons I went was that it was being taught by an ex-colleague who had been a guru in the field of Geostatistics back when I was doing my PhD research and in my early career. Odd as it sounds, the gap between geostatistics and machine learning is surprisingly small. In a very simplistic sense, LLMs predict words using math based on statistical regression analysis, which is fundamental to both Geostatistics and Machine Learning. Who knew?

Large Language Models, or LLMs, work by guessing the words to put in a sentence, kind of like autocomplete on your phone. The “guessing” is statistical prediction in the spirit of regression analysis: the model is fitted to patterns in a huge amount of text data it has been trained on (books, websites, and conversations). By studying this data, the model learns which words often go together, so it can predict what comes next, like choosing "dog" after "the fluffy" because it’s seen that combination a lot.
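To make the autocomplete analogy concrete, here is a minimal sketch in Python. It is not how a real LLM works (those are neural networks with billions of fitted parameters); it is plain word-pair counting, which is the simplest possible version of "predict the next word from patterns in the training text". The corpus and the function names are invented for illustration.

```python
from collections import Counter, defaultdict

# Toy "training data", invented purely for illustration.
corpus = [
    "the fluffy dog barked",
    "the fluffy dog slept",
    "the fluffy dog ran",
    "the fluffy cat purred",
    "the fluffy bunny hopped",
]

def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

model = train_bigrams(corpus)
probs = next_word_probabilities(model, "fluffy")
prediction = max(probs, key=probs.get)

print(probs)       # dog: 0.6, cat: 0.2, bunny: 0.2
print(prediction)  # dog
```

The toy model picks "dog" only because "dog" follows "fluffy" most often in the text it was given. Change the corpus and the "opinion" changes with it.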

Of course it is far more complex than this; LLMs have layers of complex rules (“abstraction layers”) they create themselves. Instead of humans telling them exactly what to do, the models figure out their own patterns and connections, like understanding that "fluffy" might also lead to "cat" or "bunny." These layers of self-made rules help them handle tricky language tasks, but at their core, they’re still using those statistical predictions to string words together.

Suddenly this makes sense

"The cartoon presents a false dichotomy: either we address climate change and get a better world, or we do nothing and face catastrophe"

The amount of literature that discusses climate and energy is trivial. The amount of training data that has “Climate” and “Catastrophe” in close proximity is very large. Or put another way, it can predict what comes next, like choosing "catastrophe" after "climate" because it’s seen that combination a lot.
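The "close proximity" idea can be sketched the same way. The snippet below measures how often one word appears within a few words of another. The four sentences are an invented, deliberately skewed mini-corpus, not real data, and `cooccurrence_rate` is a hypothetical helper written for this illustration.

```python
import re

# Invented, deliberately skewed mini-corpus; not real data.
corpus = [
    "Scientists warn of climate catastrophe unless emissions fall",
    "The climate crisis could become a catastrophe for coastal cities",
    "Activists say climate catastrophe is near",
    "Climate policy raised energy costs for poorer households",
]

def cooccurrence_rate(documents, anchor, target, window=5):
    """Fraction of `anchor` occurrences with `target` within `window` words."""
    hits = 0
    total = 0
    for doc in documents:
        words = re.findall(r"[a-z]+", doc.lower())
        for i, word in enumerate(words):
            if word != anchor:
                continue
            total += 1
            nearby = words[max(0, i - window): i + window + 1]
            if target in nearby:
                hits += 1
    return hits / total if total else 0.0

rate_catastrophe = cooccurrence_rate(corpus, "climate", "catastrophe")
rate_costs = cooccurrence_rate(corpus, "climate", "costs")

print(rate_catastrophe)  # 0.75
print(rate_costs)        # 0.25
```

With a corpus skewed like this one, any purely statistical predictor will favour "catastrophe" over "costs" near "climate", whatever the prompt was actually asking for.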

You can also see this effect in the pre-climate days. Journalists had an almost Tourette-like mannerism of writing “fragile” in front of “ecosystem”.[2] There is also an example of Nelson Mandela being described as “the first African-American President of South Africa”.

Beyond Statistics

There are increasing reports of AI exhibiting “worrying behaviour” - hypothetically keen to blackmail an engineer who would be responsible for replacing it (Claude), or building-in work-arounds to shut-down commands (OpenAI).

But what is interesting in these stories of AI “behaviour” is that we know (for the moment) that it is just statistical word play, based on the patterns seen in its training data sets. So maybe these “worrying behaviours” just reflect a sub-set of human behaviour?

I was thinking about this and then realized that most "experts" and “commentators” (including me) do the same thing - we talk to people, we read and listen to loads of stuff (training data) and then regurgitate it in some new word-play form. Obviously there are also real experts who do actual research.

What gives me hope is that the ability to digest a bunch of stuff and be able to come up with original or at least non-conformist "takes" on the narrative is where humans still have an edge over statistical AI - as seen above, you really have to bully it to get it to step out of a consensus view.

In many ways, this explains why certain artists hold significant value in society - they use their art to hold up a mirror to norms; to challenge and to show non-obvious relationships. That said, not all art is original and provocative, and much snobbery in "arts" is misplaced because engineering and science challenges need huge amounts of creativity to make those leaps of understanding - a creativity that is as valid and valuable as any in the arts - but is dismissed (a) because it is outside the ring-fenced community of "creatives" and (b) because it is a creativity that is hard/impossible to understand[3].

And this is kind of the point of C.P. Snow's 1959 essay The Two Cultures and the Scientific Revolution:

“A good many times I have been present at gatherings of people who, by the standards of the traditional culture, are thought highly educated and who have with considerable gusto been expressing their incredulity of scientists. Once or twice I have been provoked and have asked the company how many of them could describe the Second Law of Thermodynamics. The response was cold: it was also negative. Yet I was asking something which is the scientific equivalent of: Have you read a work of Shakespeare's?

I now believe that if I had asked an even simpler question -- such as, What do you mean by mass, or acceleration, which is the scientific equivalent of saying, Can you read? -- not more than one in ten of the highly educated would have felt that I was speaking the same language. So the great edifice of modern physics goes up, and the majority of the cleverest people in the western world have about as much insight into it as their neolithic ancestors would have had.”

For the moment it seems that AI will produce word-salad slop-posts, and there is a danger we will be drowned in excruciating lists, “hard-hitting” single-line posts and the increasingly sad “it’s not A, it’s B” style of clickbait content.

On the other hand, this leaves an open runway for the many fantastic writers who dare to dig into data and join dots that many either choose not to join or simply don’t see. I’m sure that AI will evolve (probably very rapidly) into being more sophisticated - but in the meantime - this incident underscores a human advantage: our capacity for non-conformist thinking/creativity, akin to artists challenging norms or scientists bridging C.P. Snow’s Two Cultures divide.

AI regurgitates patterns; humans can still leap beyond consensus, offering original takes that hold a mirror to society’s blind spots. In a world where energy transitions could impoverish as easily as they inspire, fostering this creative edge is not just valuable: it’s essential[4].

[1] https://en.wikipedia.org/wiki/Confirmation_bias

[2] Or as The Two Ronnies put it, “word association football” (IYKYK).

[3] On the other hand, one could also argue that in certain disciplines there is too much creativity in their “science”!

[4] Thank you Grok for this summary sentence :-)

I asked ChatGPT to comment on the cartoon and got this reply....

This cartoon, by Joel Pett, has been widely circulated since its debut at the 2009 Copenhagen Climate Conference, and it offers a pithy, satirical take on climate scepticism.

💬 Main message:

The speaker sarcastically suggests that even if climate change were a “hoax,” the actions taken to combat it — such as improving air and water quality, energy independence, and livable cities — would still yield overwhelmingly positive outcomes. The punchline: “What if it’s a big hoax and we create a better world for nothing?”

🔍 What it gets right:

Co-benefits of climate action: Many policies framed around climate mitigation (e.g. reduced fossil fuel use, greener cities) yield tangible public health and environmental improvements regardless of climate outcomes.

Framing the stakes: It reframes the risk — not acting might cause catastrophic harm, but acting, even “unnecessarily,” could still leave the world better off.

Challenges scepticism without being alarmist: The cartoon doesn’t argue the science directly but instead critiques the logic of opposition to climate action.

⚖️ Counterpoints / criticisms:

Cost-blind optimism: Critics might argue that some “green” policies are costly, regressive, or poorly implemented, and so aren’t automatically net beneficial — particularly for poorer households or energy-intensive industries.

False dichotomy: It oversimplifies the debate by implying all climate action is inherently good. In practice, how things are implemented (e.g. biomass subsidies, net zero targets, supply chain outsourcing) matters greatly.

Dismissal of legitimate policy debate: The cartoon may seem to imply that anyone questioning the cost-effectiveness or prioritisation of certain climate policies is against clean air or healthy children — which isn’t always fair.

🧠 Bottom line:

It's an effective rhetorical piece — sharp, ironic, and memorable — but like most satire, it oversimplifies. The real world isn’t as tidy as the cartoon suggests, especially when policy intersects with economics, geopolitics, and social equity.

But as a rebuttal to nihilistic inaction, it's powerful.

The cartoon completely misses the critical point, a point that overwhelms everything claimed about climate change or "the solutions" which are in fact non-solutions.

The real key point is the problem is not Climate Change but the legitimate aspirations of Developing Nations to reach a modern standard of living, with a functioning Industrial Economy, which will require a 5X increase in World Primary Energy supply. And major increases in Oil & Gas needed just to supply petrochemical industry, including vital polymers, fertilizer & lubricating oils, which will grow accordingly. That is where Oil & Gas consumption should be prioritized, not for energy.

There is no way fossil is capable of supplying that level of energy economically. Nor are renewables capable, not even close. Not even fossil + renewables. The only energy source capable of supplying that level of energy economically is Nuclear. Just the resources of thorium and uranium on the accessible portion of the Earth's land mass would power that level of energy consumption for 20Myrs. Fusion resources would supply that much energy past when the Sun dies. Happy coincidence, Nuclear energy has insignificant GHG emissions, so that just makes the Climate Change question irrelevant.

Add to that Nuclear releases negligible toxic emissions, far, far less than any other energy source. So, the simple truth is, the energy transition needs to be to Nuclear, nothing else matters. And because of that, the Climate Change Grifters despise nuclear more than even fossil. And go to great lengths to blockade nuclear expansion. With their $trillions in wealth.

And during the past few years, we have seen how vulnerable our current energy infrastructure is to economic blackmail and war. The specter of even worse and longer Middle East wars stands before us. We couldn't care less about the Middle East if not for all the Oil & Gas there. It's just a big sandpit. But we have a World of Oil & Gas hegemony, mostly centered on the most geopolitically unstable regions on Earth. Replacing Fossil with ubiquitous Uranium & Thorium is just a giant insurance policy for humanity, insurance against economic strangulation.

So how do we go about replacing most energy (primary energy, including electricity & heat)? These two videos will explain it:

Energy Transition: Nuclear SMRs vs Renewables, Energy Transition Crisis:

https://youtube.com/watch?v=yBF2fGUO5cQ

"This video explains how advanced small modular nuclear reactor (SMR) technology can be used to completely replace all of the energy we now derive from fossil fuels, for less investment than what’s already been spent on renewable energy in the last two decades alone."

Good example of that tech needed:

Thorium Molten-Salt Reactor, Copenhagen Atomics Onion Core - Thomas Jam Pederson @ TEAC12

https://www.youtube.com/watch?v=QqxvBAJn_vc